Fast development or future-oriented development?

Web Development

In development, I aim for maximum performance with minimal resources while keeping a close eye on scalability. That’s why microservice architecture has become my go-to choice. When Docker containers scale automatically to meet global demand, the hassles of server expansion and co-location become a thing of the past.

In my experience, cloud platforms like AWS and GCP have proven more reliable than traditional bare-metal servers, and they are more cost- and resource-efficient as well. They are also a good fit for a true DevOps environment, where development and operations integrate seamlessly.

For me, it all boils down to creating the smallest, most efficient services possible. I’m building a global service, and the initial architecture isn’t my primary concern: in any web service, bottlenecks can appear regardless of the structure. Minimizing them is crucial, much as maximizing multi-core or GPU usage is in game development. Speed, after all, is the holy grail of the web.

As a startup, we can’t ignore business factors either. Cost, schedule, security, localization, and legal issues all come into play. From a cost perspective, the cloud (or IaaS) provides the flexibility of auto-scaling, eliminating the need for upfront server investments.

While AWS Elastic Load Balancing is a powerful tool, it can be a bit pricey for startups. I’ve been using Google Cloud Platform for about three years as a cost-effective alternative and have recently dived into Docker orchestration with Kubernetes.

Google’s investment in microservices is impressive, and Kubernetes, despite a slight learning curve, has proven to be incredibly efficient. Scaling replicas up or down by editing a YAML file is very fast, and working with private Docker repositories is straightforward. Distributing versioned releases through YAML files has gone smoothly so far.
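To make the "scale by editing a manifest" idea concrete, here is a minimal sketch. It builds a manifest as a plain Python dict and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. It assumes a current Kubernetes `apps/v1` Deployment; the service name `backend` and the image path `registry.example.com/backend:1.0.0` are made up for illustration and are not from my actual setup.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal Kubernetes Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def scaled(manifest: dict, replicas: int) -> dict:
    """Return a deep copy of the manifest with a different replica count."""
    copy = json.loads(json.dumps(manifest))
    copy["spec"]["replicas"] = replicas
    return copy


# Hypothetical service name and image, used only for this example.
manifest = deployment_manifest("backend", "registry.example.com/backend:1.0.0", 2)
scaled_up = scaled(manifest, 5)
print(json.dumps(scaled_up, indent=2))
```

Scaling is then a one-field change: only `spec.replicas` differs between the two manifests, and re-applying the file lets the cluster reconcile the difference.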

Even hot fixes take less than 10 seconds. Pods can be destroyed and recreated, rolling updates keep connection disruption to a minimum, and the whole flow integrates with GitLab CI: once the test cases pass and the latest tag is pushed to the repository, a single Kubernetes command triggers the rolling update.
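As a rough sketch of that last CI step, the snippet below only assembles the commands a job could run after the tests pass; it does not execute them. `kubectl set image` and `kubectl rollout status` are standard kubectl subcommands and `CI_COMMIT_TAG` is a predefined GitLab CI variable, but the deployment, container, and image names are placeholders, and my real pipeline is not necessarily written this way.

```python
import os
import shlex


def rolling_update_command(deployment: str, container: str, image: str, tag: str) -> list:
    """Command that points the deployment at a new image tag, starting a rolling update."""
    return ["kubectl", "set", "image", f"deployment/{deployment}", f"{container}={image}:{tag}"]


def rollout_status_command(deployment: str) -> list:
    """Command that waits until the rolling update has finished."""
    return ["kubectl", "rollout", "status", f"deployment/{deployment}"]


# In a GitLab CI tag pipeline the tag is available as CI_COMMIT_TAG;
# "1.0.1" is a fallback so the sketch also runs outside CI.
tag = os.environ.get("CI_COMMIT_TAG", "1.0.1")

# Placeholder names, for illustration only.
update = rolling_update_command("backend", "backend", "registry.example.com/backend", tag)
status = rollout_status_command("backend")

print(shlex.join(update))
print(shlex.join(status))
```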

At the heart of development is the user. UX and functionality are paramount, but speed is a major factor in user satisfaction. While my wife, my most trusted QA tester, assures me our service is fast, the real challenge is maintaining that speed as the user base grows.

Rapid prototyping, however, can be tricky. With familiar tools like Spring Framework, MyBatis, JSTL, and Velocity, I could easily churn out a quick build, but that kind of hard-coded approach doesn’t align with modern trends and could create bottlenecks later.

So I’ve embraced Play Framework with Scala, which excels at asynchronous operations. Relational database drivers are typically blocking, but libraries like Slick expose an asynchronous API over MySQL, so the backend can run queries without tying up request threads and without changing the database schema.
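The general pattern of putting an asynchronous front on a blocking database driver can be sketched in a few lines. This is not Slick or Play code: it is a Python `asyncio` analogy (Python 3.9+ for `asyncio.to_thread`), with an in-memory SQLite table standing in for MySQL and a made-up `users` table.

```python
import asyncio
import sqlite3


def fetch_user_blocking(user_id: int):
    """Blocking query; stands in for a call through a blocking database driver."""
    conn = sqlite3.connect(":memory:")
    try:
        # Hypothetical table and rows, created here so the sketch is self-contained.
        conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
        conn.execute("INSERT INTO users VALUES (1, 'alice'), (2, 'bob')")
        cursor = conn.execute("SELECT id, name FROM users WHERE id = ?", (user_id,))
        return cursor.fetchone()
    finally:
        conn.close()


async def fetch_user(user_id: int):
    """Run the blocking call on a worker thread so the event loop stays free."""
    return await asyncio.to_thread(fetch_user_blocking, user_id)


async def main():
    # Both lookups are in flight at the same time; neither blocks the event loop.
    return await asyncio.gather(fetch_user(1), fetch_user(2))


users = asyncio.run(main())
print(users)
```

The database itself is untouched; only the calling side changes, which is why the schema does not need to be redesigned.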

This approach keeps the backend responsive, and if tasks pile up, container allocation increases automatically, which makes load balancing and scaling easy. Meanwhile, database replication separates writes from reads: inserts go to the primary and selects go to the replicas, preparing for future growth. GCP’s intuitive UI simplifies replica management.
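A read/write split like this can be sketched as a tiny router. This is an illustrative assumption about how the routing could work, not the code my service runs: the host names are placeholders, and the statement check is deliberately naive.

```python
import itertools


class ReplicaRouter:
    """Send SELECT statements to read replicas (round-robin), everything else to the primary."""

    def __init__(self, primary: str, replicas: list):
        self.primary = primary
        # Cycle through the replicas; fall back to the primary if there are none.
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        words = sql.lstrip().split(None, 1)
        verb = words[0].upper() if words else ""
        if verb == "SELECT" and self._replicas is not None:
            return next(self._replicas)
        return self.primary


# Placeholder host names, for illustration only.
router = ReplicaRouter("db-primary", ["db-replica-1", "db-replica-2"])
for statement in (
    "INSERT INTO users (name) VALUES ('alice')",
    "SELECT * FROM users",
    "SELECT * FROM users WHERE id = 1",
):
    print(router.route(statement), "<-", statement)
```

A real router would also need to keep reads inside a transaction, or reads that must see a write that just happened, on the primary, since replicas can lag behind.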

Our service leverages a Play Framework backend and a Node.js frontend with Express and AngularJS, all easily packaged into containers and automatically load balanced.

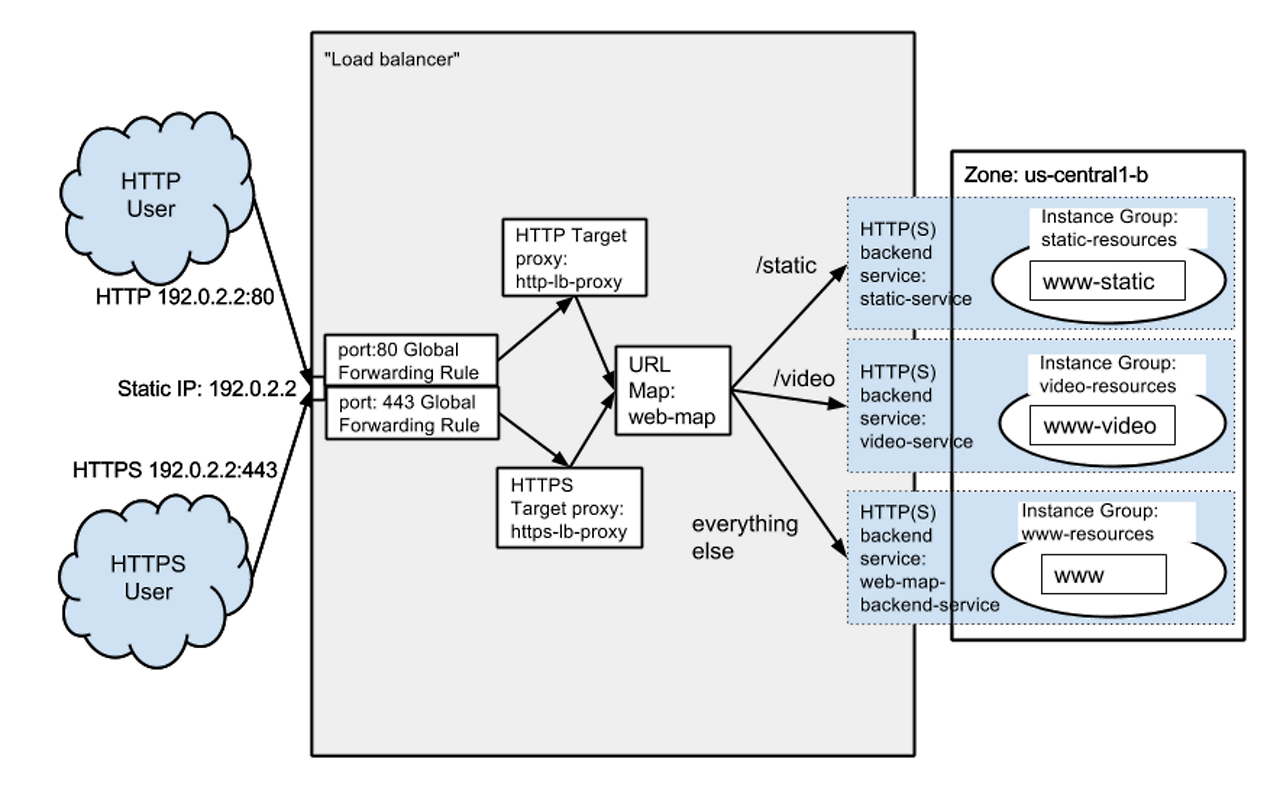

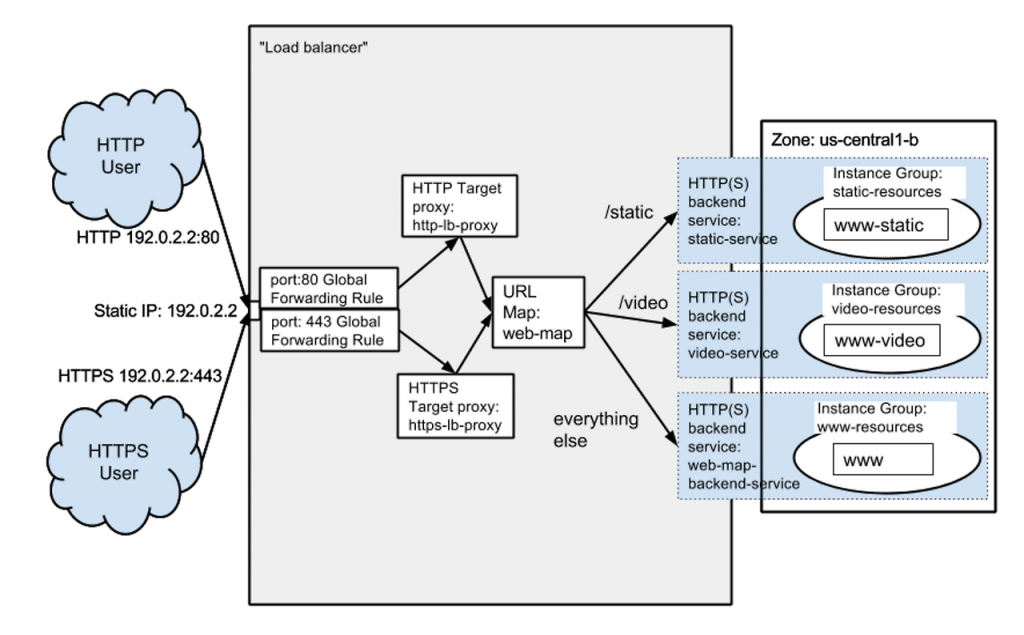

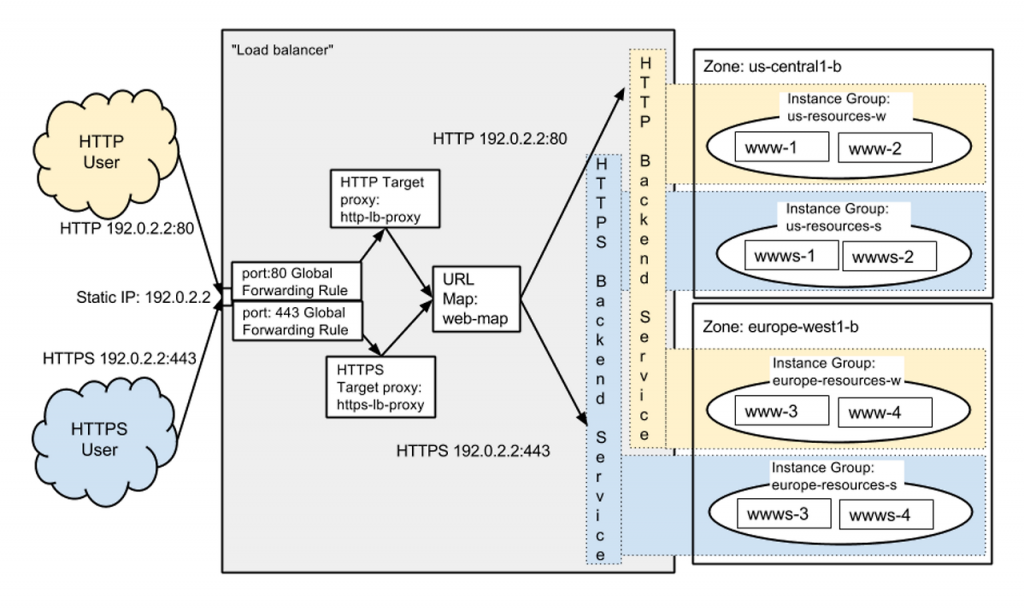

The challenge now lies in global load balancing. Neither Kubernetes nor GCP’s UI offers a fully automated solution. GCP’s Cloud CDN is a step forward, but it still proxies to the region’s IP even with multiple instances.

I’m quite fond of this structure, but it has its drawbacks. The load balancer that Kubernetes generates automatically works at the TCP level (layer 4), so it doesn’t support HTTP(S) load balancing. Achieving that requires bringing layer 7 (the HTTP protocol) into the Kubernetes service, and I haven’t found a workaround yet. Security also calls for SSL, meaning HTTPS, but whether it should terminate at the load balancer or in the backend (Netty for the backend, Node.js for the frontend) is still an open question.
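The difference between the two layers comes down to what the balancer can see. The toy sketch below is not how GCP or Kubernetes implement load balancing; it only illustrates that a layer 4 balancer picks a backend from the connection’s address and port, while a layer 7 balancer has the parsed HTTP request and can route by host and path. The host name, paths, and backend names are hypothetical.

```python
import hashlib


def pick(backends: list, key: str) -> str:
    """Deterministically map a key to one of the backends."""
    digest = hashlib.sha256(key.encode()).digest()
    return backends[int.from_bytes(digest[:4], "big") % len(backends)]


def l4_route(client_ip: str, client_port: int, backends: list) -> str:
    """Layer 4: only the connection's address and port are visible, not the HTTP request."""
    return pick(backends, f"{client_ip}:{client_port}")


def l7_route(host: str, path: str, routes: dict, default: str) -> str:
    """Layer 7: the HTTP request is parsed, so host and path prefix can drive routing."""
    for (route_host, prefix), backend in routes.items():
        if host == route_host and path.startswith(prefix):
            return backend
    return default


# Hypothetical routing table: API calls go to the Play backend, the rest to the Node.js frontend.
routes = {
    ("example.com", "/api/"): "play-backend",
    ("example.com", "/"): "node-frontend",
}

print(l4_route("203.0.113.7", 51234, ["pod-a", "pod-b", "pod-c"]))
print(l7_route("example.com", "/api/users", routes, "node-frontend"))
print(l7_route("example.com", "/index.html", routes, "node-frontend"))
```

Routing by path, and terminating HTTPS at the balancer, both need the layer 7 view; a layer 4 balancer just forwards the encrypted bytes.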

The key is to prioritize future-oriented development, but milestones can get derailed as challenges arise. Rapid development is crucial for startups, but stability is equally important for a successful launch. Striking a balance between the two requires ongoing learning and adaptation.

Unexpected paperwork and business hurdles can be just as time-consuming as future-proofing development. While not everything can be anticipated, stability ultimately trumps all. The same goes for paperwork – it’s a necessary evil, and sometimes, it’s better to over-prepare than under-prepare.

As a developer and DevOps enthusiast, my mission is to deliver stable and fast services, even amidst the constant evolution of the tech industry. Despite the challenges and uncertainties, the pursuit of excellence drives me to create the best possible user experience.